Disclaimer: This post includes Amazon affiliate links. Clicking on them earns me a commission and does not affect the final price.

Hello 👋 It’s that time of the year again! A new minor version of Go, superseding Go 1.20, was released a few days ago on August 8th: this is Go 1.21! 💥 🎉 🎊

“Go 1.21 is released!” by @elibendersky — https://t.co/053bb3R7Zw#golang

— Go (@golang) August 8, 2023

Updating

Depending on the platforms you’re using for development and production, you may already be able to use Go 1.21; if not, the official download page provides pre-packaged versions for different platforms.

For more concrete examples:

- macOS Homebrew,

- Official Go 1.21 Docker images:

  - Debian Bookworm: docker pull golang:1.21.0-bookworm

  - Alpine 3.18: docker pull golang:1.21.0-alpine3.18

- GitHub Actions.

What is new?

Code for all the examples is available on GitHub.

Go 1.21 adds a lot of nice changes. The following is a list of eight features worth calling out; I encourage you to look at the Release Notes to get familiar with other changes that could be relevant to your existing use cases. I bet you will find something interesting.

1. Backward language compatibility

Code for this example is available on GitHub.

Since the release of Go 1.0, the language has emphasized backward compatibility through what it calls the Go 1 compatibility promise. This promise explicitly covers the specification of the language and the APIs defined in the standard library, meaning code that you write using old versions of Go will still compile successfully in newer versions. It does not guarantee, however, that the code keeps its old behavior when a recent version changes that behavior to fix a bug.

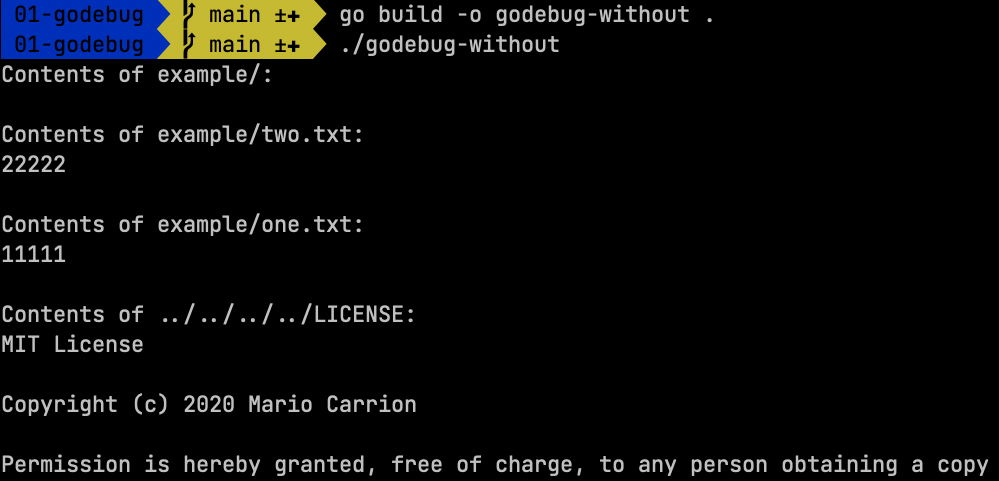

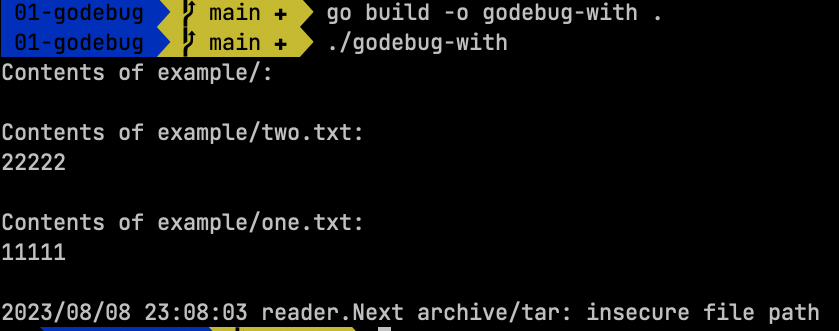

For example, let’s take a change added in Go 1.20 that improves security when reading tar files by rejecting insecure paths. The following code will behave differently depending on the value of GODEBUG=tarinsecurepath=X, where X can be:

- 0 to indicate enforcement of the new security change, or

- 1 to ignore the security change and keep the behavior previous to Go 1.20.

Starting with Go 1.21, the go:debug directive allows us to explicitly set the behavior we want at compile time, so adding the directive //go:debug tarinsecurepath=0 will reject the insecure paths when the GODEBUG variable is missing (however, keep in mind the GODEBUG variable still has higher priority).

To demonstrate this behavior, compile two versions of the binary, one including the go:debug directive and another not using the directive.

Without directive:

With directive:

The output is different because the first binary defaults to the behavior of Go 1.20 and earlier, while the second one enforces the new security check.
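
To make the directive concrete, here is a minimal sketch of a program in that spirit. It is not the code from the repository: the helper names buildArchive and firstEntry and the entry name ../evil.txt are my own assumptions for illustration. It writes a tar archive in memory with a path that escapes the current directory and then reads it back.

```go
//go:debug tarinsecurepath=0
package main

import (
	"archive/tar"
	"bytes"
	"errors"
	"fmt"
)

// buildArchive (hypothetical helper) returns an in-memory tar archive
// holding a single small file stored under the given name.
func buildArchive(name string) ([]byte, error) {
	var buf bytes.Buffer
	tw := tar.NewWriter(&buf)
	body := []byte("hello")
	hdr := &tar.Header{Name: name, Mode: 0o600, Size: int64(len(body))}
	if err := tw.WriteHeader(hdr); err != nil {
		return nil, err
	}
	if _, err := tw.Write(body); err != nil {
		return nil, err
	}
	if err := tw.Close(); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

// firstEntry (hypothetical helper) reads the first header of the archive.
// When insecure paths are rejected, Next returns the header together with
// tar.ErrInsecurePath.
func firstEntry(archive []byte) (string, error) {
	hdr, err := tar.NewReader(bytes.NewReader(archive)).Next()
	if hdr == nil {
		return "", err
	}
	return hdr.Name, err
}

func main() {
	archive, err := buildArchive("../evil.txt")
	if err != nil {
		panic(err)
	}

	name, err := firstEntry(archive)
	switch {
	case errors.Is(err, tar.ErrInsecurePath):
		fmt.Println("rejected:", name)
	case err != nil:
		fmt.Println("error:", err)
	default:
		fmt.Println("accepted:", name)
	}
}
```

Built with Go 1.21 or later and without a GODEBUG variable in the environment, this should take the rejected branch; deleting the first line should make it take the accepted branch instead, and setting GODEBUG=tarinsecurepath=1 at run time should override the directive.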

2. Forward language compatibility

With this version, a bundled Go command and toolchain are included, meaning all the tools required for creating binaries are part of that toolchain. The exciting part about this change is the new toolchain directive in go.mod and go.work: it lets us indicate the toolchain we want to use (the go line states the minimum required version), telling the go tooling to download that toolchain when it is missing. Having at least Go 1.21 installed allows us to build code that targets previous and future versions without installing those versions first.
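
As a rough sketch, a go.mod using the new directive could look like the following. The module path and the exact versions are placeholders I picked for illustration, not values taken from the example repository.

```text
module example.com/hello

go 1.21.0

toolchain go1.21.1
```

With a file like this, a locally installed Go 1.21.0 would be expected to fetch and run go1.21.1 for commands executed inside the module, assuming the GOTOOLCHAIN setting allows switching.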

3. New built-in functions: min, max and clear

Code for this example is available on GitHub.

min and max are what you imagine: they compare values of ordered types and return the smallest or the largest one, respectively. The significant bit about this feature is understanding which types are ordered, that is, which types support the comparison operators (<, <=, >, >=); please refer to the spec for more details.

The most basic way to demonstrate this new feature is to use numeric and string values:

    // `min`
    fmt.Println("min(1,0,-1) =", min(1, 0, -1)) // Outputs: -1
    fmt.Println(`min("c","cat","a") =`, min("c", "cat", "a"), "\n") // Outputs: "a"

    // `max`
    fmt.Println("max(10,100,3) =", max(10, 100, 3)) // Outputs: 100
    fmt.Println(`max("dat","xaz","xyz") =`, max("dat", "xaz", "xyz"), "\n") // Outputs: "xyz"
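
Because min and max accept any ordered type, they also work inside generic functions constrained by cmp.Ordered, a constraint from the cmp package that also ships with Go 1.21. The clamp helper below is a hypothetical example of mine, not part of the standard library; it is only a sketch of how the built-ins compose.

```go
package main

import (
	"cmp"
	"fmt"
)

// clamp (hypothetical helper) limits v to the closed interval [lo, hi]
// for any ordered type: integers, floats, and strings.
func clamp[T cmp.Ordered](v, lo, hi T) T {
	return min(max(v, lo), hi)
}

func main() {
	fmt.Println(clamp(15, 0, 10))      // 10
	fmt.Println(clamp(-2.5, 0.0, 1.0)) // 0
	fmt.Println(clamp("m", "a", "f"))  // f
}
```

Strings are compared lexically, byte by byte, which is why "m" is clamped down to "f".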

clear receives a single argument of either map or slice type. For slices, it sets all elements to the zero value of the element type, keeping the length unchanged:

    strs := make([]string, 2)
    strs[0] = "hello"
    strs[1] = "world"

    fmt.Printf("%#v - %p\n", strs, &strs) // []string{"hello", "world"} - <address>

    clear(strs)
    fmt.Printf("%#v - %p\n\n", strs, &strs) // []string{"", ""} - <address>

and, in the case of maps, it deletes all the elements:

    clients := make(map[string]int)
    clients["mario"] = 99
    clients["ruby"] = 89

    fmt.Printf("%#v - %p\n", clients, &clients) // map[string]int{"mario":99, "ruby":89} - <address>
    clear(clients)

    fmt.Printf("%#v - %p\n", clients, &clients) // map[string]int{} - <address>

4. New log/slog package

This package is the result of the proposal to add structured logging to the standard library. I covered this a few months ago, and most of that content still applies to this version; give the example code I showed you before a try by changing the imported package from golang.org/x/exp/slog to log/slog.
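
If you don’t have that earlier code at hand, the following is a minimal sketch of structured logging with log/slog. The logLine helper and the attribute names are assumptions of mine for illustration; the helper writes to an in-memory buffer and drops the timestamp so the result is reproducible.

```go
package main

import (
	"bytes"
	"fmt"
	"log/slog"
)

// logLine (hypothetical helper) logs one record at INFO level using the
// JSON handler and returns the encoded line.
func logLine(msg string, args ...any) string {
	var buf bytes.Buffer

	opts := &slog.HandlerOptions{
		// Drop the top-level time attribute so the output is stable.
		ReplaceAttr: func(groups []string, a slog.Attr) slog.Attr {
			if len(groups) == 0 && a.Key == slog.TimeKey {
				return slog.Attr{}
			}
			return a
		},
	}

	logger := slog.New(slog.NewJSONHandler(&buf, opts))
	logger.Info(msg, args...)

	return buf.String()
}

func main() {
	// {"level":"INFO","msg":"client updated","name":"mario","score":99}
	fmt.Print(logLine("client updated", "name", "mario", "score", 99))
}
```

Swapping slog.NewJSONHandler for slog.NewTextHandler would produce key=value output instead of JSON.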

5. New slices package

Code for this example is available on GitHub.

This new package implements operations applicable to slices of any type. Examples of those operations include searching, cloning, sorting, and more. slices is in a way similar to existing third-party packages, such as github.com/samber/lo, but it only focuses on this kind of Go type.

The following code uses some of the functions included in this new package:

    // slices.Index
    indexNumbers := []int{100, 2, 99, 2}
    fmt.Println(`indexNumbers := []int{100, 2, 99, 2}`)
    fmt.Println("\tslices.Index(indexNumbers, 1) =", slices.Index(indexNumbers, 1)) // Returns -1, not found
    fmt.Println("\tslices.Index(indexNumbers, 2) =", slices.Index(indexNumbers, 2), "\n") // Returns 1, the first match

    // slices.Sort
    sortNumbers := []int64{100, 2, 99, 2}
    slices.Sort(sortNumbers)
    fmt.Println(`sortNumbers := []int64{100, 2, 99, 2}`)
    fmt.Println("\tslices.Sort(sortNumbers) =", sortNumbers, "\n") // [2 2 99 100], sorted in place

    // slices.Min + slices.Max
    minMaxNumbers := []int{100, 2, 99, 2}
    fmt.Println(`minMaxNumbers := []int{100, 2, 99, 2}`)
    fmt.Println("\tslices.Max(minMaxNumbers)", slices.Max(minMaxNumbers)) // 100
    fmt.Println("\tslices.Min(minMaxNumbers)", slices.Min(minMaxNumbers)) // 2

6. New maps package

Code for this example is available on GitHub.

This new package implements operations applicable to maps of any type. As with slices, its functions resemble those offered by the samber/lo package I mentioned before.

The following code uses some of the functions included in this new package:

    clients := map[string]int{
    	"mario": 99,
    	"ruby":  89,
    }

    fmt.Println(`clients := map[string]int{"mario": 99, "ruby": 89}`) // clients := map[string]int{"mario": 99, "ruby": 89}

    // Clone
    cloned := maps.Clone(clients)
    fmt.Printf("\tcloned = %v, clients = %v\n", cloned, clients) // cloned = map[mario:99 ruby:89], clients = map[mario:99 ruby:89]
    fmt.Printf("\tcloned = %p, clients = %p\n", &cloned, &clients) // cloned = <address 1>, clients = <address 2>

    // Equal
    fmt.Println("\n\tmaps.Equal(cloned, clients)", maps.Equal(cloned, clients)) // maps.Equal(cloned, clients) true

    // Copy
    dest := map[string]int{"mario": 0, "other": -1}
    fmt.Println("\n", `dest := map[string]int{"mario": 0, "other": -1}`) // dest := map[string]int{"mario": 0, "other": -1}

    maps.Copy(dest, clients) // copies every key/value pair from clients into dest
    fmt.Println("\tmaps.Copy(dest, clients) =", dest) // maps.Copy(dest, clients) = map[mario:99 other:-1 ruby:89]

    // DeleteFunc
    maps.DeleteFunc(dest, func(_ string, v int) bool {
    	return v > 90
    })

    fmt.Println("\tmaps.DeleteFunc(dest, v > 90) =", dest) // maps.DeleteFunc(dest, v > 90) = map[other:-1 ruby:89]

7. New context functions

Code for this example is available on GitHub.

This release includes four new functions, three of which give you new ways to derive a context from an existing one.

With WithoutCancel, you get a context that is not canceled even if the original context is canceled. This behavior is helpful when you don’t want the cancelation to propagate to the derived context because some logic still has to run. For example:

    ctxTimeout, cancel := context.WithTimeout(ctx, time.Millisecond*10)
    defer cancel()

    // Derive from ctxTimeout so the new context ignores its cancelation.
    ctxNoCancel := context.WithoutCancel(ctxTimeout)

    select {
    case <-ctxTimeout.Done():
    	fmt.Println("ctxTimeout.Error:", ctxTimeout.Err()) // ctxTimeout.Error: context deadline exceeded
    	close(ch)
    }

    <-ch

    fmt.Println("context.WithoutCancel=Error:", ctxNoCancel.Err()) // context.WithoutCancel=Error: <nil>

WithDeadlineCause and WithTimeoutCause work like their original counterparts, except that you can explicitly indicate a cause for the expiration. Err still reports context deadline exceeded, while context.Cause returns the error you supplied. This is helpful when dealing with third-party APIs where you want to indicate explicitly that the call failed instead of showing the concrete reason. For example:

    ch = make(chan struct{})
    ctx = context.Background()

    ctxDeadline, cancelDeadline := context.WithDeadlineCause(ctx, time.Now().Add(time.Millisecond*10), fmt.Errorf("deadline cause"))
    defer cancelDeadline()

    select {
    case <-ctxDeadline.Done():
    	fmt.Println("ctxDeadline.Error:", ctxDeadline.Err()) // ctxDeadline.Error: context deadline exceeded
    	close(ch)
    }

    <-ch

    fmt.Println("context.WithDeadlineCause=Error:", ctxDeadline.Err()) // context.WithDeadlineCause=Error: context deadline exceeded
    fmt.Println("context.WithDeadlineCause=Cause:", context.Cause(ctxDeadline)) // context.WithDeadlineCause=Cause: deadline cause

Finally, AfterFunc registers a function to call, in its own goroutine, after a context is canceled, and returns a stop function that unregisters it. This can trigger a new workflow after a context is canceled without affecting the original context cancelation workflow. For example:

    ch = make(chan struct{})
    ctx = context.Background()

    ctxTimeout, cancelTimeout := context.WithTimeout(ctx, time.Millisecond*10)
    defer cancelTimeout()

    // AfterFunc returns a stop function; calling it unregisters the callback,
    // so it must not be called while we still expect the callback to run.
    stop := context.AfterFunc(ctxTimeout, func() {
    	fmt.Println("AfterFunc called") // AfterFunc called
    	close(ch)
    })
    defer stop() // no effect by then: the callback has already run

    select {
    case <-ctxTimeout.Done():
    	fmt.Println("ctxTimeout.Error:", ctxTimeout.Err()) // ctxTimeout.Error: context deadline exceeded
    }

    <-ch // wait for the callback, which runs in its own goroutine

    fmt.Println("context.WithTimeout=Error:", ctxTimeout.Err()) // context.WithTimeout=Error: context deadline exceeded
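
The value returned by AfterFunc deserves a closer look: it is a stop function that reports whether it prevented the callback from running. The sketch below uses a hypothetical stopTwice helper of mine to show both results.

```go
package main

import (
	"context"
	"fmt"
)

// stopTwice (hypothetical helper) registers a callback on a cancelable
// context and calls the returned stop function twice before the context
// is canceled.
func stopTwice() (first, second bool) {
	ctx, cancel := context.WithCancel(context.Background())
	defer cancel()

	stop := context.AfterFunc(ctx, func() {
		fmt.Println("callback ran") // not expected: it is unregistered below
	})

	first = stop()  // true: the callback was unregistered before it could start
	second = stop() // false: the callback had already been stopped

	return first, second
}

func main() {
	first, second := stopTwice()
	fmt.Println(first, second) // true false
}
```

Once stop has returned true, canceling the context no longer triggers the callback.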

8. New sync functions

Code for this example is available on GitHub.

There are three new functions to lazily initialize a value on first use, similar to the behavior implemented by sync.Once.

OnceValue and OnceValues return a function that computes, only once, either one or two values, respectively. For example:

    onceString := sync.OnceValue[string](func() string {
    	time.Sleep(time.Second)
    	return "sync.OnceValue: computed once!"
    })

    // time.Now() is evaluated before onceString(), so the first line shows the time before the one-second sleep.
    fmt.Println(time.Now(), onceString()) // <start time> sync.OnceValue: computed once!
    fmt.Println(time.Now(), onceString()) // <start time + 1 second> sync.OnceValue: computed once!
    fmt.Println(time.Now(), onceString()) // <start time + 1 second> sync.OnceValue: computed once!
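
OnceValues follows the same idea for functions returning two values, which fits the common value-plus-error shape. In this sketch the names calls and parsePort are mine, chosen only for illustration; the counter is there to show the wrapped function runs a single time.

```go
package main

import (
	"fmt"
	"strconv"
	"sync"
)

// calls counts how many times the wrapped function actually runs.
var calls int

// parsePort (hypothetical) parses a port on the first call and returns
// the cached value and error on every later call.
var parsePort = sync.OnceValues(func() (int, error) {
	calls++
	return strconv.Atoi("8080")
})

func main() {
	for i := 0; i < 3; i++ {
		port, err := parsePort()
		fmt.Println(port, err, calls) // 8080 <nil> 1
	}
}
```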

OnceFunc works similarly to the previous two functions, except that the wrapped function returns no value: the function it returns runs the wrapped one only once, no matter how many times it is called. For example:

    onceFunc := sync.OnceFunc(func() {
    	time.Sleep(time.Second)
    	fmt.Println("sync.OnceFunc: computed once!")
    })

    fmt.Println(time.Now()) // <start time>
    onceFunc()              // sleeps one second, then prints: sync.OnceFunc: computed once!
    fmt.Println(time.Now()) // <start time + 1 second>
    onceFunc()              // returns immediately
    fmt.Println(time.Now()) // <start time + 1 second>
    onceFunc()              // returns immediately

Conclusion

As I mentioned in the beginning, there are more features than I can list in this post; please read the release notes, I bet you will find something useful. For me, the winning new feature is the toolchain support, which removes the need for external applications that manage Go versions locally. I can see the demise of goenv, just like what happened with dep back in the day when Go modules were released.

Great job, Go team! I’m looking forward to Go 1.22!

Recommended reading

If you’re looking to sink your teeth into more Go-related topics I recommend the following books: