Disclaimer: This post includes Amazon affiliate links. If you click on one of them and make a purchase, I’ll earn a commission. Your final price is not affected at all by using those links.

Welcome to another post in the series covering Quality Attributes / Non-Functional Requirements. This time I’m talking about Testability.

What is Testability?

According to Software Architecture in Practice:

Testability refers to the ease with which software can be made to demonstrate its faults through (typically execution-based) testing

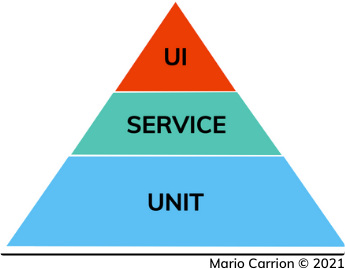

There’s also a concept called the Test Automation Pyramid, introduced in Succeeding with Agile, which describes how many tests we should write, and how much effort we should invest, for each layer we are trying to test.

This layering is similar to the one I discussed previously when I talked about Domain Driven Design.

The Test Automation Pyramid defines three layers, from top to bottom: UI, Service and Unit:

- UI: represents the User Interface; it’s the topmost layer and the one with the fewest tests,

- Service: represents services such as Use Cases; it’s the middle layer, where we should write as many tests as needed,

- Unit: represents Unit Tests; it’s the largest layer and where we should focus most of our testing effort.

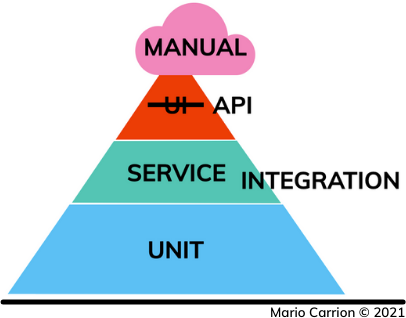

Because in the context of this post we are building Backend APIs, I like to make a few changes to the original pyramid:

- Add Manual: this is literally when there’s some human testing that needs to be done,

- Rename UI to API: because for the Backend the public APIs, like HTTP Endpoints, are the entry points to our application; and

- Rename Service to Integration: this is the layer where we interact with datastores.
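To make the API layer a bit more concrete, here is a minimal sketch of exercising an HTTP endpoint in-process with the standard library's net/http/httptest package. The handler createTaskHandler, the /tasks path and the JSON payload are hypothetical and only serve as an illustration; they are not taken from the code that accompanies this post.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// createTaskHandler is a hypothetical endpoint used only for illustration.
// It accepts a JSON body with a non-empty "description" field.
func createTaskHandler(w http.ResponseWriter, r *http.Request) {
	var req struct {
		Description string `json:"description"`
	}

	if err := json.NewDecoder(r.Body).Decode(&req); err != nil || req.Description == "" {
		http.Error(w, "invalid payload", http.StatusBadRequest)
		return
	}

	w.Header().Set("Content-Type", "application/json")
	w.WriteHeader(http.StatusCreated)
	json.NewEncoder(w).Encode(map[string]string{"description": req.Description})
}

// callCreateTask sends body to the handler without opening a network socket
// and returns the recorded HTTP status code.
func callCreateTask(body string) int {
	req := httptest.NewRequest(http.MethodPost, "/tasks", strings.NewReader(body))
	rec := httptest.NewRecorder()

	createTaskHandler(rec, req)

	return rec.Code
}

func main() {
	fmt.Println(callCreateTask(`{"description":"test"}`))
	fmt.Println(callCreateTask(`{}`))
}
```

In a real test the body of callCreateTask would live inside a func TestXxx(t *testing.T), and the status code would be compared with the expected value using t.Fatalf.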

Depending on characteristics of the project we are building, like deadlines or the available resources, there’s usually a compromise to be made to deliver the project on time, and sometimes that compromise is not implementing tests.

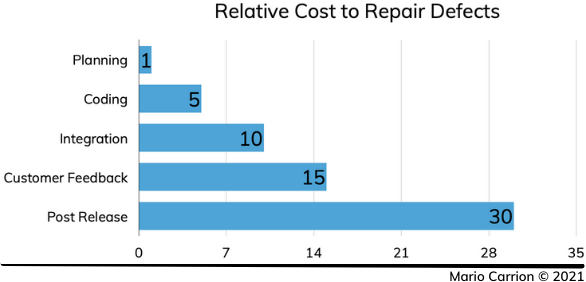

We should be really careful when making decisions like that, where testing is not considered a fundamental part of the software being written. This is better put in perspective by the paper The Economic Impacts of Inadequate Infrastructure for Software Testing, published by the National Institute of Standards and Technology, which among other things measured the relative cost of repairing defects depending on the phase of software development in which the repair has to happen.

What this means in practice is that the further along the project is, the harder and more expensive it will be to repair defects if testing wasn’t built in from the start.

Unit Testing

The code used for this post is available on GitHub.

Idiomatic testing in Go relies on Table Driven Tests. For example, for the Priority type we have something like the following:

```go
func TestPriority_Validate(t *testing.T) {
	t.Parallel()

	tests := []struct {
		name    string            // subtest name
		input   internal.Priority // value under test
		withErr bool              // whether Validate should fail
	}{
		{
			"OK: PriorityNone",
			internal.PriorityNone,
			false,
		},
		{
			"OK: PriorityLow",
			internal.PriorityLow,
			false,
		},
		{
			"OK: PriorityMedium",
			internal.PriorityMedium,
			false,
		},
		{
			"OK: PriorityHigh",
			internal.PriorityHigh,
			false,
		},
		{
			"ERR: unknown value",
			internal.Priority(-1),
			true,
		},
	}

	for _, tt := range tests {
		tt := tt // per-iteration copy for the parallel subtest

		t.Run(tt.name, func(t *testing.T) {
			t.Parallel()

			actualErr := tt.input.Validate()
			if (actualErr != nil) != tt.withErr {
				t.Fatalf("expected error %t, got %v", tt.withErr, actualErr)
			}

			var ierr *internal.Error
			if tt.withErr && !errors.As(actualErr, &ierr) {
				t.Fatalf("expected %T error, got %T", ierr, actualErr)
			}
		})
	}
}
```

- L4-34: Defines a slice of test cases,

  - L5-7: Defines values used to indicate the name of the test, the input and the withErr to indicate the output,

  - L8-33: Defines the actual test cases,

- L36-52: Runs the tests using subtests,

  - L37-40: Creates a local copy of tt to allow passing in the value in the subtest in L39,

  - L42-45: Verifies the call to Validate with the test case input matches the expected test case output,

  - L47-50: Similarly, it confirms the returned output matches the expected type.
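The test above exercises a Validate method and an error type that are not shown in this post. As a rough sketch of what such a type could look like, and of why errors.As works in the last assertion, consider the following. Priority, Error and the helper isDomainError are simplified, hypothetical stand-ins written for this example; the real internal package may be implemented differently.

```go
package main

import (
	"errors"
	"fmt"
)

// Priority is a hypothetical stand-in for internal.Priority.
type Priority int8

const (
	PriorityNone Priority = iota
	PriorityLow
	PriorityMedium
	PriorityHigh
)

// Error is a hypothetical stand-in for internal.Error. Using a pointer
// receiver means callers match it with a *Error target in errors.As.
type Error struct {
	msg string
}

func (e *Error) Error() string { return e.msg }

// Validate returns nil for known priorities and a *Error otherwise.
func (p Priority) Validate() error {
	switch p {
	case PriorityNone, PriorityLow, PriorityMedium, PriorityHigh:
		return nil
	}

	return &Error{msg: fmt.Sprintf("unknown priority %d", p)}
}

// isDomainError reports whether err is, or wraps, a *Error.
func isDomainError(err error) bool {
	var ierr *Error
	return errors.As(err, &ierr)
}

func main() {
	for _, p := range []Priority{PriorityNone, PriorityHigh, Priority(-1)} {
		err := p.Validate()
		fmt.Printf("priority=%d err=%v domain=%t\n", p, err, isDomainError(err))
	}
}
```

Because the check goes through errors.As instead of a direct type assertion, it keeps working if the error is later wrapped with fmt.Errorf and %w.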

Integration Testing

In cases where code requires datastores and there’s a way to run them in Docker, I prefer using the ory/dockertest package. For example, to test the Create method of postgresql.Task, something like the following could be implemented:

```go
func TestTask_Create(t *testing.T) {
	t.Parallel()

	t.Run("Create: OK", func(t *testing.T) {
		t.Parallel()

		// newDB is a test helper that is not shown in this excerpt.
		task, err := postgresql.NewTask(newDB(t)).Create(context.Background(),
			internal.CreateParams{
				Description: "test",
				Priority:    internal.PriorityNone,
				Dates:       internal.Dates{},
			})
		if err != nil {
			t.Fatalf("expected no error, got %s", err)
		}

		if task.ID == "" {
			t.Fatalf("expected valid record, got empty value")
		}
	})

	//...
}
```

As you may notice, this does not follow the table-driven approach. This is intentional: in cases like this the code that interacts with the datastore already has a concrete goal (it uses the Repository Pattern), so there’s no logic other than executing the calls needed to communicate with the datastore, which in this example means executing the SQL INSERT command.

Conclusion

Testability as a Quality Attribute is fundamental for any software project, especially those meant to last. Thankfully, in Go testing support is already included in the standard library, and only a few packages are needed to enhance the testing experience, like ory/dockertest, dnaeon/go-vcr and h2non/gock.

Recommended reading

If you’re looking to sink your teeth into more Software Architecture, specifically regarding Testability, I recommend the following content:

- Book: Software Architecture in Practice (SEI Series in Software Engineering) 3rd Edition

- Book: Succeeding with Agile: Software Development Using Scrum 1st Edition

- Post: Go Tool for mocking and testing: counterfeiter

- Post: Go Package for Equality: github.com/google/go-cmp

- Post: Go Package for Mocking HTTP Traffic: github.com/h2non/gock

- Post: Go Package for Testing HTTP interactions: github.com/dnaeon/go-vcr