Disclaimer: This post includes Amazon affiliate links. If you click on one of them and make a purchase, I'll earn a commission. Please note that your final price is not affected at all by using those links.

Welcome to another post in the series covering Quality Attributes / Non-Functional Requirements. This time I'm talking about a Caching Pattern to improve Scalability called Write-Through.

What is Caching?

Caching is storing a precomputed value for an expensive calculation and reusing it instead of calculating it again. In Microservices in Go: Caching using memcached I covered two other approaches to consider before starting to use caching; in this post I assume you're familiar with them before you invest time in implementing caching.

How does the Caching Pattern Write-Through work?

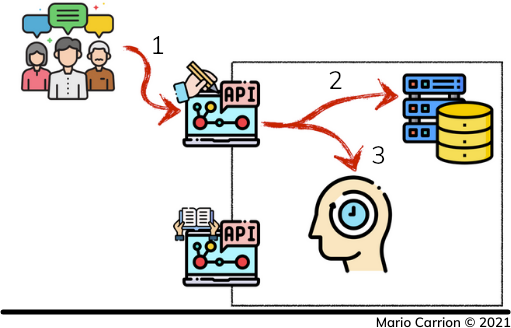

The Write-Through Pattern works alongside a Write-Only API: the data that is expected to be accessed later through a Read-Only API is cached at the moment the write request happens. This means the following:

- Customers call the Write-Only API,

- Data is updated in the persistent data store, and

- Data is updated in the cache data store.
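The write path above can be sketched with in-memory stand-ins. This is a minimal, hypothetical example, not the post's code: `DB`, `Cache` and `WriteThrough` are illustrative names, and the maps replace PostgreSQL and memcached. It shows the ordering: the persistent data store is updated first, and the cache only after that write succeeds, so a rejected write never ends up cached.

```go
package main

import "errors"

// Task is a hypothetical, simplified record used only in this sketch.
type Task struct {
	ID          string
	Description string
}

// DB stands in for the persistent data store; Fail simulates a rejected write.
type DB struct {
	rows map[string]Task
	Fail bool
}

// Save persists the task or returns an error when the write is rejected.
func (d *DB) Save(t Task) error {
	if d.Fail {
		return errors.New("db: write failed")
	}
	d.rows[t.ID] = t
	return nil
}

// Cache stands in for the cache data store.
type Cache struct {
	items map[string]Task
}

// Set stores the task in the cache under its ID.
func (c *Cache) Set(t Task) { c.items[t.ID] = t }

// WriteThrough handles a Write-Only request: it updates the persistent data
// store first and only then the cache, so a failed write is never cached.
func WriteThrough(db *DB, cache *Cache, t Task) error {
	if err := db.Save(t); err != nil {
		return err
	}
	cache.Set(t)
	return nil
}
```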

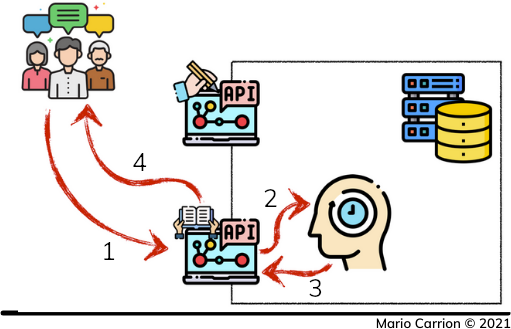

When customers retrieve the data using a Read-Only API the following happens:

- Customers call the Read-Only API,

- The API requests data from the cache,

- Cached values are returned back to our Read-Only API, and finally

- Information is made available to our Customers.
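The read path can be sketched the same way. In this hypothetical example the Read-Only API answers from the cache the write already populated, and a read counter on the store shows that such a request never reaches the persistent data store. The fallback to the store on a miss is an assumption added here so the function always returns something; `Store`, `ReadAPI` and `Reads` are illustrative names.

```go
package main

// Task is a hypothetical, simplified record used only in this sketch.
type Task struct {
	ID          string
	Description string
}

// Store stands in for the persistent data store and counts how often it is read.
type Store struct {
	rows  map[string]Task
	Reads int
}

// Find reads a task from the persistent store, incrementing the read counter.
func (s *Store) Find(id string) (Task, bool) {
	s.Reads++
	t, ok := s.rows[id]
	return t, ok
}

// ReadAPI is a hypothetical Read-Only API backed by the cache.
type ReadAPI struct {
	cache map[string]Task
	store *Store
}

// Get returns the cached value when present; only a miss reaches the store
// (the fallback is an assumption of this sketch).
func (r *ReadAPI) Get(id string) (Task, bool) {
	if t, ok := r.cache[id]; ok {
		return t, true
	}
	return r.store.Find(id)
}
```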

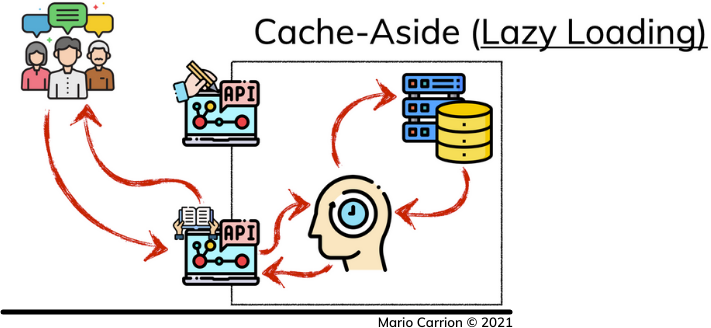

When using the Write-Through Caching Pattern, one important thing to consider is the time to live of the cached values, also called the eviction time. This pattern is typically used together with the Cache-Aside Caching Pattern: cached values get an expiration so that data that is rarely read does not overwhelm our cache data store, and Cache-Aside repopulates an expired value the next time it is requested.

How can the Write-Through Caching Pattern be implemented in Go?

The code used for this post is available on GitHub.

Similar to the implementation of the Cache-Aside Caching Pattern, I will use the Decorator pattern to keep the same API as the datastore type while making the calls needed to cache values during writes.

As a concrete example, in the To-Do Microservice a new type, Task, is added to the internal/memcached package. This memcached.Task type receives the persistent data store together with the memcache.Client:

```go
// TaskStore defines the methods implemented by the persistent data store.
type TaskStore interface {
	Create(ctx context.Context, description string, priority internal.Priority, dates internal.Dates) (internal.Task, error)
	Delete(ctx context.Context, id string) error
	Find(ctx context.Context, id string) (internal.Task, error)
	Update(ctx context.Context, id string, description string, priority internal.Priority, dates internal.Dates, isDone bool) error
}

// Task decorates a TaskStore, caching tasks in memcached.
type Task struct {
	client     *memcache.Client
	orig       TaskStore
	expiration time.Duration
}

// NewTask wraps orig; cached values expire after 10 minutes.
func NewTask(client *memcache.Client, orig TaskStore) *Task {
	return &Task{
		client:     client,
		orig:       orig,
		expiration: 10 * time.Minute,
	}
}
```

Notice that memcached.Task implements the same methods declared by the memcached.TaskStore interface. This allows it to wrap the persistent data store and to be used in its place as the argument when creating our service.Task.

Our Write-Only API is implemented by three methods, the first one being Create:

```go
func (t *Task) Create(ctx context.Context, description string, priority internal.Priority, dates internal.Dates) (internal.Task, error) {
	// Persist first; nothing is cached if the write fails.
	task, err := t.orig.Create(ctx, description, priority, dates)
	if err != nil {
		return internal.Task{}, err
	}

	setTask(t.client, task.ID, &task, t.expiration) // Write-Through Caching

	return task, nil
}
```

The second one is the Delete method:

```go
func (t *Task) Delete(ctx context.Context, id string) error {
	if err := t.orig.Delete(ctx, id); err != nil {
		return err
	}

	deleteTask(t.client, id) // Write-Through Caching: don't serve a deleted task

	return nil
}
```
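Removing the cached value matters because the Read-Only API answers from the cache. This hypothetical sketch (`stores`, `deleteDBOnly` and `deleteWriteThrough` are illustrative names) compares deleting only from the persistent data store with deleting from both stores, as Delete above does: in the first case readers keep seeing a task that no longer exists.

```go
package main

// stores holds hypothetical in-memory versions of both data stores.
type stores struct {
	db    map[string]string
	cache map[string]string
}

// read serves from the cache first, falling back to the persistent store.
func (s *stores) read(id string) (string, bool) {
	if v, ok := s.cache[id]; ok {
		return v, true
	}
	v, ok := s.db[id]
	return v, ok
}

// deleteDBOnly forgets the cache, so the deleted task stays readable.
func (s *stores) deleteDBOnly(id string) {
	delete(s.db, id)
}

// deleteWriteThrough removes the task from the persistent store, then from the cache.
func (s *stores) deleteWriteThrough(id string) {
	delete(s.db, id)
	delete(s.cache, id)
}
```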

And the third one is the Update method:

```go
func (t *Task) Update(ctx context.Context, id string, description string, priority internal.Priority, dates internal.Dates, isDone bool) error {
	if err := t.orig.Update(ctx, id, description, priority, dates, isDone); err != nil {
		return err
	}

	// Write-Through Caching: drop the old value first, so a failed reload can't leave it cached.
	deleteTask(t.client, id)

	task, err := t.orig.Find(ctx, id)
	if err != nil {
		return err
	}

	setTask(t.client, task.ID, &task, t.expiration)

	return nil
}
```
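The order used by Update (write, invalidate, reload, re-cache) can be checked in isolation. In this hypothetical sketch, `repo` and `update` are illustrative names and `failFind` simulates the reload failing: on success the cache holds the new value, and on failure no stale value is left behind. Reloading through Find, rather than caching the arguments directly, presumably keeps the cache identical to whatever the persistent data store actually saved.

```go
package main

import "errors"

// repo is a hypothetical persistent store whose reads can be made to fail.
type repo struct {
	rows     map[string]string
	failFind bool
}

// Update stores the new description.
func (r *repo) Update(id, desc string) { r.rows[id] = desc }

// Find reads the stored description, or fails when failFind is set.
func (r *repo) Find(id string) (string, error) {
	if r.failFind {
		return "", errors.New("find failed")
	}
	return r.rows[id], nil
}

// update writes, invalidates the cached value, reloads it and caches it again.
func update(r *repo, cache map[string]string, id, desc string) error {
	r.Update(id, desc)
	delete(cache, id) // invalidate first
	v, err := r.Find(id)
	if err != nil {
		return err
	}
	cache[id] = v
	return nil
}
```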

Finally, Find, the only read method, could simply delegate the call to the persistent data store. Instead, it uses the Cache-Aside Caching Pattern, so that a task whose cached value expired after being written is read from the persistent data store and cached again:

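```go
func (t *Task) Find(ctx context.Context, id string) (internal.Task, error) {
	var res internal.Task

	// Cache hit: return the cached value without touching the persistent store.
	if err := getTask(t.client, id, &res); err == nil {
		return res, nil
	}

	// Cache miss (or expired value): read from the persistent store...
	res, err := t.orig.Find(ctx, id) // Cache-Aside Caching
	if err != nil {
		return internal.Task{}, err
	}

	// ...and cache it again with the same expiration.
	setTask(t.client, res.ID, &res, t.expiration)

	return res, nil
}
```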

Writing this decorator type gives us the flexibility to keep the same internal Go API we previously implemented; the only change is when instantiating the service in the main package:

```go
	repo := postgresql.NewTask(conf.DB)
	mrepo := memcached.NewTask(conf.Memcached, repo)

	// ...

	svc := service.NewTask(conf.Logger, mrepo, msearch, msgBroker)
```

Conclusion

The Write-Through pattern, like the Cache-Aside pattern, is meant to improve the Scalability of our services by reducing the time it takes to return values to our customers. The key difference is when the caching happens: during the Write-Only calls. That makes it a good fit when we know a write is likely to be followed immediately by requests to the Read-Only APIs; for example, a News Feed could cache a brand new article right after publishing it, so clients consuming the feed in real time can access it with little to no delay.

Recommended reading

If you’re looking to sink your teeth into more Software Architecture and Caching-related topics I recommend the following links:

- Quality Attributes / Non-Functional Requirements

- Software Architecture in Go: Cache-Aside, Cloud Design Pattern for Scalability

- Microservices in Go: Caching using memcached

- Hands-On Software Architecture with Golang

- Building Evolutionary Architectures: Support Constant Change - Post