Disclaimer: This post includes Amazon affiliate links. If you click on one of them and you make a purchase I’ll earn a commission. Please note that your final price is not affected at all by using those links.

Welcome to another post in the series covering Quality Attributes / Non-Functional Requirements. This time I’m talking about a Cloud Design Pattern for improving Scalability called Cache-Aside.

What is Caching?

Caching means storing a precomputed value for an expensive calculation and reusing it. In Microservices in Go: Caching using memcached I covered two other approaches to consider before starting to use caching; in this post I’m assuming you’re familiar with those before investing time in implementing caching.

How does the Caching Pattern Cache-Aside work?

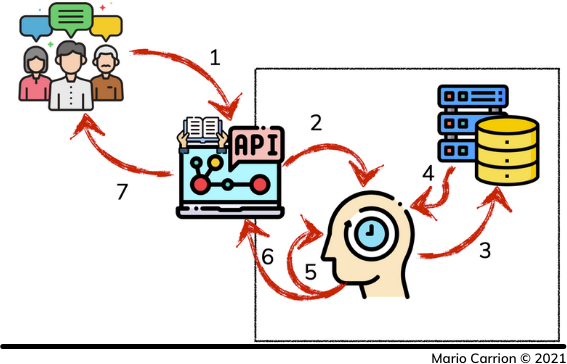

The Cache-Aside Pattern works by sitting between a Read-Only API and the data meant to be returned to our clients. The idea is to return the data immediately when it’s available in the cache. When the values don’t exist, for example on the very first request, the API first retrieves the information from the persistent data store, then sets the value in the cache, and finally replies with those values.

The very first time a request is made, a Cache-Miss occurs and the following steps take place:

1. Customers request data from the Read-Only API,
2. The API requests data from the cache,
3. Cache data is not found, therefore it’s fetched from the persistent data store,
4. The data store returns the requested values,
5. Values are set in the caching layer,
6. Cached values are returned to our Read-Only API, and finally
7. Information is made available to our Customers.

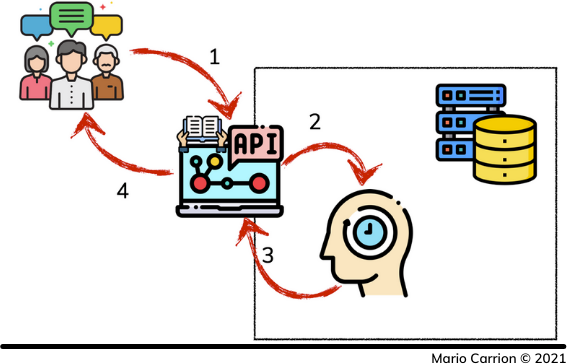

Because the data is already in the cache, subsequent requests for the same values read it from the cache directly and the steps are simplified:

1. Customers request data from the Read-Only API,
2. The API requests data from the cache and the data is found,
3. Cached values are returned to our Read-Only API, and finally
4. Information is made available to our Customers.
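To make the two flows concrete without a memcached server, here is a minimal sketch that uses a plain Go map as the cache and another map as a stand-in for the persistent data store. The `DataStore`, `CacheAside`, and `Get` names are hypothetical, made up for this illustration; the numbered comments follow the cache-miss steps listed above.

```go
package main

import (
	"fmt"
	"sync"
)

// DataStore is a hypothetical stand-in for the persistent data store.
type DataStore struct {
	data  map[string]string
	calls int // counts how many times the data store was queried
}

// Fetch simulates an expensive read from the persistent data store.
func (d *DataStore) Fetch(key string) (string, bool) {
	d.calls++
	v, ok := d.data[key]
	return v, ok
}

// CacheAside is a hypothetical in-memory cache sitting in front of a DataStore.
type CacheAside struct {
	mu    sync.Mutex
	cache map[string]string
	store *DataStore
}

func NewCacheAside(store *DataStore) *CacheAside {
	return &CacheAside{cache: make(map[string]string), store: store}
}

// Get implements the Cache-Aside lookup.
func (c *CacheAside) Get(key string) (string, error) {
	c.mu.Lock()
	defer c.mu.Unlock()

	// 2. The API requests data from the cache.
	if v, ok := c.cache[key]; ok {
		// Cache hit: return the cached value without touching the data store.
		return v, nil
	}

	// 3-4. Cache miss: fetch the value from the persistent data store.
	v, ok := c.store.Fetch(key)
	if !ok {
		return "", fmt.Errorf("key %q not found", key)
	}

	// 5. Set the value in the cache so subsequent requests are hits.
	c.cache[key] = v

	// 6. Return the value to the caller.
	return v, nil
}

func main() {
	store := &DataStore{data: map[string]string{"task-1": "Buy milk"}}
	api := NewCacheAside(store)

	v1, _ := api.Get("task-1") // cache miss: queries the data store
	v2, _ := api.Get("task-1") // cache hit: served from the map
	fmt.Println(v1, v2, store.calls)
}
```

Calling `Get` twice with the same key queries the data store only once: the first call takes the cache-miss path and the second one is served from the map. Holding the mutex during the data store call keeps the sketch simple and prevents concurrent misses for the same key from querying the data store twice, at the cost of serializing all lookups.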

How can the Cache-Aside Pattern be implemented in Go?

The code used for this post is available on GitHub.

I like implementing the Cache-Aside pattern in Go using the Decorator pattern; that way the API defined by the datastore type stays the same and only the Cache-Aside logic needs to be built.

Using the To-Do Microservice example, I’m implementing the Cache-Aside pattern to speed up search results. There is a new package called memcached that defines a new type called Task, which implements the same methods as its equivalent elasticsearch.Task type.

The memcached.Task type holds the memcached client, as well as a reference to the original datastore through an interface type:

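```go
// Task decorates a Datastore, caching search results in memcached.
type Task struct {
	client *memcache.Client
	orig   Datastore
}

// Datastore defines the methods implemented by the original data store,
// for example elasticsearch.Task.
type Datastore interface {
	Delete(ctx context.Context, id string) error
	Index(ctx context.Context, task internal.Task) error
	Search(ctx context.Context, args internal.SearchArgs) (internal.SearchResults, error)
}
```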

Because I’m only interested in caching search results, the other two methods delegate the calls to the original data source:

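```go
// Index delegates to the original data store; nothing is cached.
func (t *Task) Index(ctx context.Context, task internal.Task) error {
	return t.orig.Index(ctx, task)
}

// Delete delegates to the original data store; nothing is cached.
func (t *Task) Delete(ctx context.Context, id string) error {
	return t.orig.Delete(ctx, id)
}
```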

The actual implementation of this pattern lives in the Search method, which follows the steps described above:

```go
func (t *Task) Search(ctx context.Context, args internal.SearchArgs) (internal.SearchResults, error) {
	key := newKey(args)

	item, err := t.client.Get(key) // 2. API requests data from cache,
	if err != nil {
		if errors.Is(err, memcache.ErrCacheMiss) { // 3. Cache data is not found, therefore it’s fetched from persistent data store,
			res, err := t.orig.Search(ctx, args) // 4. Data store returns requested values,
			if err != nil {
				return internal.SearchResults{}, internal.WrapErrorf(err, internal.ErrorCodeUnknown, "orig.Search")
			}

			// 5. Values are set in the caching layer. Encoding or Set failures are
			// deliberately ignored: the results are still valid, they just aren't cached.
			var b bytes.Buffer
			if err := gob.NewEncoder(&b).Encode(&res); err == nil {
				_ = t.client.Set(&memcache.Item{
					Key:        key,
					Value:      b.Bytes(),
					Expiration: int32(time.Now().Add(25 * time.Second).Unix()), // absolute Unix time, 25 seconds from now
				})
			}

			return res, nil // 6. Cached values are returned back to our Read-Only API, and finally
		}

		return internal.SearchResults{}, internal.WrapErrorf(err, internal.ErrorCodeUnknown, "client.Get")
	}

	// Cache hit (step 2 of the second list): decode and return the cached values.
	var res internal.SearchResults
	if err := gob.NewDecoder(bytes.NewReader(item.Value)).Decode(&res); err != nil {
		return internal.SearchResults{}, internal.WrapErrorf(err, internal.ErrorCodeUnknown, "gob.NewDecoder")
	}

	return res, nil
}
```
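The post doesn’t show `newKey` or the shape of `internal.SearchResults`, so the following is only a hypothetical sketch of what such helpers could look like. It assumes made-up `SearchArgs`, `SearchResults`, and `Task` fields, builds a deterministic key by JSON-encoding the arguments and hashing them with SHA-256 (memcached keys are limited to 250 bytes and cannot contain whitespace or control characters), and shows the same gob encode/decode round trip that `Search` performs.

```go
package main

import (
	"bytes"
	"crypto/sha256"
	"encoding/gob"
	"encoding/hex"
	"encoding/json"
	"fmt"
)

// SearchArgs is a hypothetical version of internal.SearchArgs.
type SearchArgs struct {
	Description *string
	IsDone      *bool
	From        int64
	Size        int64
}

// Task and SearchResults are hypothetical versions of the internal types.
type Task struct {
	ID          string
	Description string
	IsDone      bool
}

type SearchResults struct {
	Tasks []Task
	Total int64
}

// newKey hashes the search arguments into a short, memcached-safe key.
// json.Marshal cannot fail for this struct, so its error is ignored.
func newKey(args SearchArgs) string {
	b, _ := json.Marshal(args)
	sum := sha256.Sum256(b)
	return "tasks_search_" + hex.EncodeToString(sum[:])
}

// encode serializes results with gob, as Search does before calling Set.
func encode(res SearchResults) ([]byte, error) {
	var b bytes.Buffer
	if err := gob.NewEncoder(&b).Encode(&res); err != nil {
		return nil, err
	}
	return b.Bytes(), nil
}

// decode deserializes a cached value, as Search does on a cache hit.
func decode(data []byte) (SearchResults, error) {
	var res SearchResults
	err := gob.NewDecoder(bytes.NewReader(data)).Decode(&res)
	return res, err
}

func main() {
	desc := "milk"
	k1 := newKey(SearchArgs{Description: &desc, Size: 10})
	k2 := newKey(SearchArgs{Description: &desc, Size: 10})
	fmt.Println(k1 == k2, len(k1))

	data, _ := encode(SearchResults{Tasks: []Task{{ID: "1", Description: "Buy milk"}}, Total: 1})
	res, _ := decode(data)
	fmt.Println(res.Total, res.Tasks[0].Description)
}
```

Hashing the pointer values through JSON, rather than formatting the struct with `%v`, keeps the key stable across calls: formatting would print pointer addresses, so equal searches could produce different keys.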

Conclusion

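The Cache-Aside pattern is meant to improve the Scalability of our services by reducing the time it takes to return values to our customers and by reducing the load on the persistent data store. Make sure you define automatic expiration times to avoid serving stale data: in the example above, Index and Delete don’t touch the cache, so a cached search result can be up to 25 seconds out of date. In future posts I will cover other Caching Patterns that take a different approach toward the same end goal.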

Recommended reading

If you’re looking to sink your teeth into more Software Architecture-related topics I recommend the following books:

- Agile Software Development, Principles, Patterns, and Practices

- Building Evolutionary Architectures: Support Constant Change

- Hands-On Software Architecture with Golang

- Microservices in Go: Caching using memcached

- Software Architecture in Go: Write-Through Caching Pattern for Scalability